Advanced Manufacturing Innovations: Exploring Smart Technologies and Their Industrial Applications

The advent of smart manufacturing technologies marks a revolutionary shift in industrial operations and lays the groundwork for the ‘smart factory.’ At the heart of this transformation is the combination of manufacturing automation software, sophisticated data analytics, and tightly integrated systems. Together, these innovations enable real-time decision-making, improve efficiency, and reduce waste, redefining how manufacturing is done.

These technologies are already making a significant impact across a wide range of sectors. BMW, for example, has integrated more than 200 AI applications into its production line, aiming to reduce the need for repairs and cut waste.

In the aerospace industry, giants like Boeing are using automation to substantially reduce processing times for specific components. Reported targets of a tenfold reduction may be optimistic, but they show how strongly the industry is pushing to improve efficiency through advanced manufacturing technologies.

These leading-edge smart manufacturing tools are redefining traditional production methods and ushering in an era of greater efficiency, productivity, and adaptability. This article explains what smart manufacturing is, examines its impact on a variety of industries, reviews the ten key technologies driving this evolution, and looks at the trends likely to shape the field next.

About Advanced Manufacturing

Smart manufacturing represents a transformative leap in industrial operations: a suite of advanced technologies that redefine traditional manufacturing processes to improve efficiency, adaptability, and sustainability. The approach centers on the smart factory, a highly interconnected and intelligent environment where devices, sensors, and systems work together to optimize production. Variously called Industry 4.0, Digital Manufacturing, and Advanced Manufacturing, this shift ushers in an era in which digitization and data analytics inform decision-making and provide real-time insight into production.

At the core of smart manufacturing is the Internet of Things (IoT), a network of sensors and devices that collect and exchange data so that machines and equipment can communicate with each other. This interconnectedness is crucial for streamlining manufacturing processes. Artificial Intelligence (AI) and Machine Learning (ML) build on this ecosystem by analyzing the vast amounts of data it generates: predicting equipment failures, optimizing production schedules, and enhancing quality control. Such predictive analytics shift manufacturing operations from reactive to proactive, improving efficiency and reducing downtime.
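
To make predictive analytics more concrete, here is a minimal Python sketch of one common building block. It flags sensor readings that deviate sharply from recent history using a rolling z-score. The function name, window size, threshold, and vibration values are illustrative assumptions and are not drawn from any particular product. Production systems typically use richer models trained on historical failure data.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Return the indices of readings that deviate sharply from recent history.

    A reading is flagged when it lies more than `threshold` standard
    deviations from the mean of the preceding `window` readings (a rolling
    z-score). The first `window` readings are never flagged because there
    is not yet enough history to compare against.
    """
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma == 0:
                # Perfectly flat history: any change at all is unusual.
                is_anomaly = value != mu
            else:
                is_anomaly = abs(value - mu) / sigma > threshold
            if is_anomaly:
                flagged.append(i)
        history.append(value)
    return flagged


# Hypothetical vibration amplitudes (mm/s) from one machine's sensor.
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 1.0, 0.95, 4.2, 1.0]
print(detect_anomalies(vibration))  # [7]
```

A flagged index would typically trigger an inspection or feed a maintenance model rather than stop the line on its own.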

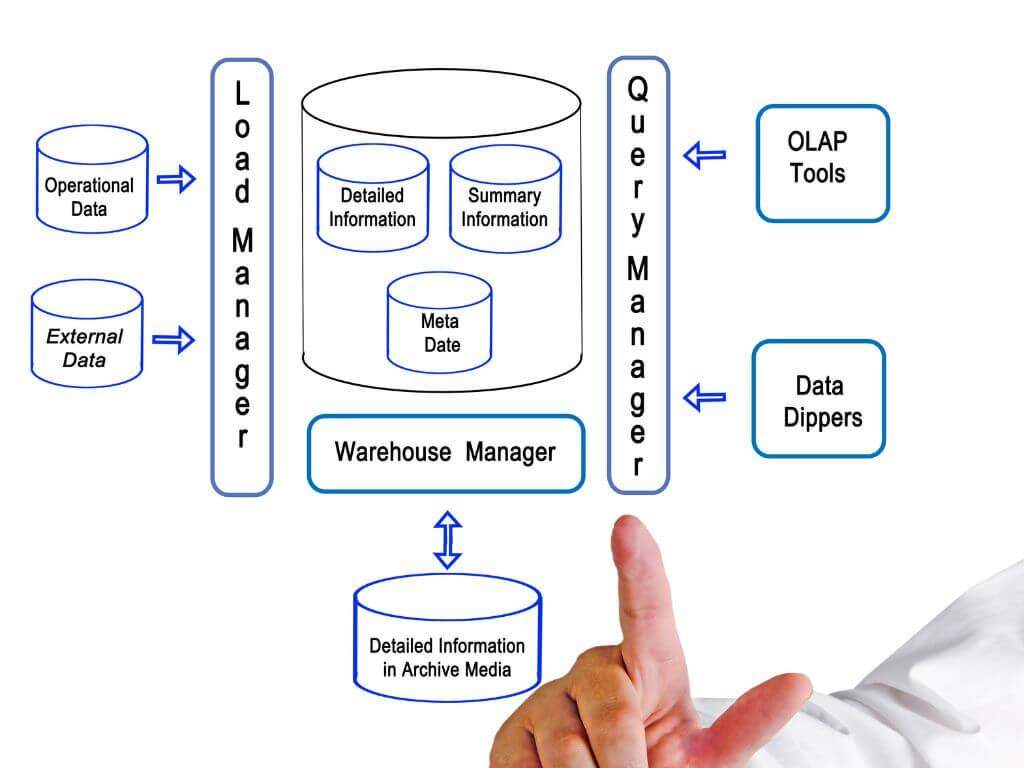

Cloud computing plays a pivotal role by providing the necessary scalable storage and computing resources to handle the extensive data analysis and decision-making requirements in smart manufacturing. This technology supports the integration and processing of data across disparate locations, facilitating a cohesive operational framework for global manufacturing enterprises. Big Data Analytics complements these technologies by processing large sets of data to identify patterns, trends, and insights, which are instrumental in driving efficiency and strategic decision-making within manufacturing operations.

The adoption of Robotics and Automation is another major milestone in smart manufacturing. Robots and automated systems perform repetitive tasks with greater precision and productivity. This improves quality by reducing human error and improves safety by taking workers out of hazardous or physically demanding tasks. Augmented and Virtual Reality (AR/VR) technologies add further benefits: immersive training, remote assistance, and product design visualization that bridge the virtual and real worlds, enabling more effective planning and execution of manufacturing operations.

The cumulative impact of these technologies manifests in numerous benefits, including increased productivity through optimized resource utilization and accelerated production cycles. Quality is notably enhanced via real-time monitoring, which facilitates the early detection and correction of defects. The flexibility of smart factories also means they can swiftly adapt to changing market demands and customer preferences. Predictive maintenance, enabled by AI, reduces equipment downtime, while mass customization allows for the efficient tailoring of products to specific customer needs. Furthermore, the optimization of manufacturing processes leads to reduced operational costs and waste, contributing to greater sustainability through more efficient use of resources and energy.

Smart manufacturing is not just an incremental improvement but a radical reimagining of how production is conducted. It signifies a strategic shift toward more responsive, efficient, and sustainable manufacturing practices across diverse industries, from automotive and aerospace to consumer electronics and construction. The integration of smart manufacturing technologies demonstrates the industry’s commitment to innovation, efficiency, and sustainability, paving the way for a future where manufacturing processes are not only more intelligent but also more attuned to the demands of a rapidly evolving global market.

Navigating the Smart Factory Evolution: A Journey Through Data and Technology

The transformation of traditional manufacturing into smart factories represents a significant leap forward in industrial efficiency and productivity. This evolution is not just about adopting new technologies but about fundamentally changing how data is used to drive decision-making and optimize operations. The journey through the levels of smart factory evolution reveals a strategic pathway from basic data collection to fully autonomous manufacturing ecosystems. Let’s delve into the critical stages that mark the progression of a smart factory.

At the outset, Level 1: Basic Data Availability lays the groundwork for the smart factory. This initial phase is characterized by the installation of sensors and IoT devices across the manufacturing landscape. These tools are pivotal in gathering essential data from machinery and processes, marking the first step towards digitization. However, the data collected at this stage is often isolated in silos and requires manual effort to analyze and interpret. Despite these limitations, the availability of data is a crucial starting point.

Progressing to Level 2: Accessible Data, the focus shifts towards integrating disparate data sources and enhancing accessibility for all relevant stakeholders. By implementing Manufacturing Execution Systems (MES) or Enterprise Resource Planning (ERP) systems, data is centralized, facilitating easier access and paving the way for more informed decision-making. Although analysis at this stage still relies heavily on human effort, the establishment of a cohesive data infrastructure is essential for further advancements.

Level 3: Proactive Analytics marks a significant leap towards automation and intelligence in manufacturing processes. This stage introduces big data analytics tools and basic machine learning algorithms that enable the factory to proactively address potential issues and optimize operations. By recognizing patterns and predicting equipment failures before they occur, manufacturing processes become more efficient and less prone to disruptions.

The culmination of the smart factory evolution is Level 4: Action-Oriented Data (Self-Optimizing Factory). This phase represents the fully realized vision of the smart factory, where advanced AI, machine learning, cloud computing, and robotics converge to create an autonomous manufacturing environment. Here, processes self-optimize in real-time based on continuous data analysis. Machines communicate directly with each other, adjusting production autonomously with minimal human intervention, thereby achieving unparalleled efficiency and resilience.
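
At its simplest, "self-optimizing" means a closed feedback loop: measure, compare with a target, and adjust automatically. The Python sketch below shows that loop under stated assumptions. The `self_adjust` function and the linear `oven` process are hypothetical stand-ins for a real machine. The update rule is a basic incremental (integral-style) correction, not the AI-driven optimization a full Level 4 factory would layer on top.

```python
def self_adjust(target, setting, process, gain=0.25, steps=20):
    """Repeatedly measure the process and nudge the setting toward the target.

    `process` maps a setting to a measured output (a stand-in for the real
    machine). Each step applies `setting += gain * error`, an incremental,
    integral-style correction with no human in the loop.
    """
    for _ in range(steps):
        measured = process(setting)
        error = target - measured
        setting += gain * error
    return setting


def oven(heater_setting):
    # Hypothetical process: temperature rises 2 degrees per unit of setting.
    return 2 * heater_setting + 10


print(round(self_adjust(target=50, setting=0, process=oven), 3))  # 20.0
```

For a linear process like this one, the loop converges only when `gain` times the process slope lies between 0 and 2. A gain that is too large makes the setting oscillate, which is one reason real self-optimizing systems need careful tuning.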

It’s important to note that moving through these levels is an evolutionary process rather than a revolutionary jump. Each stage builds on the last and depends on a solid foundation of data quality and integrity. A robust Level 2, for example, provides the integrated, accessible data that AI and machine learning need in order to work effectively at Levels 3 and 4.

The evolution towards a smart factory is both a technical upgrade and a strategic transformation in utilizing data to enhance business performance. From the initial steps of data acquisition to the sophisticated decision-making enabled by advanced analytics, each stage in the evolution of a smart factory brings manufacturers closer to achieving a highly efficient, responsive, and intelligent production environment. As industries continue to navigate this journey, the promise of increased productivity, reduced costs, and enhanced product quality becomes increasingly attainable, heralding a new era of manufacturing excellence.

Unlocking the Potential of Smart Manufacturing: Key Technologies Transforming Industry

The landscape of manufacturing is undergoing a seismic shift, driven by a suite of advanced technologies that promise to redefine the industry’s future. This transformation is anchored in the rise of smart manufacturing environments, where digital and physical processes converge to create more efficient, sustainable, and adaptable production lines. Let’s explore the pivotal technologies at the heart of this industrial revolution and their impact on the manufacturing sector.

The Connective Tissue: IoT Integration

At the foundation of any smart factory lies the Internet of Things (IoT). This network of sensors, actuators, and smart devices embedded in machinery and throughout production environments is crucial for real-time data collection. This data, covering machine performance, energy usage, environmental conditions, and inventory levels, fuels every other advancement in smart manufacturing. IoT makes predictive maintenance possible: Siemens, for example, has adopted predictive maintenance models credited with reducing downtime by 30%. IoT also streamlines production flows by improving inventory tracking and management through RFID tags.
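
RFID readers usually report the same tag many times, so raw reads must be collapsed before they are useful for inventory. Below is a minimal Python sketch of that step, assuming each read carries a tag ID, a reader zone, and a timestamp. The `TagRead` record, the zone names, and the pallet IDs are hypothetical and do not follow any vendor's data format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TagRead:
    tag_id: str       # identifier stored on the RFID tag (hypothetical format)
    zone: str         # location of the reader that saw the tag
    timestamp: float  # seconds since the start of the shift


def inventory_by_zone(reads):
    """Collapse raw reads into {zone: set of tag_ids}.

    Each tag is counted once, in the zone where it was seen most recently,
    so duplicate reads and earlier locations are discarded.
    """
    last_zone = {}
    for read in sorted(reads, key=lambda r: r.timestamp):
        last_zone[read.tag_id] = read.zone
    inventory = {}
    for tag_id, zone in last_zone.items():
        inventory.setdefault(zone, set()).add(tag_id)
    return inventory


reads = [
    TagRead("PALLET-A", "receiving", 0.0),
    TagRead("PALLET-A", "receiving", 1.0),  # duplicate read
    TagRead("PALLET-B", "receiving", 2.0),
    TagRead("PALLET-A", "assembly", 5.0),   # pallet moved to assembly
]
print(inventory_by_zone(reads))
# {'assembly': {'PALLET-A'}, 'receiving': {'PALLET-B'}}
```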

The Brains Behind the Operation: Data Analytics and AI

Artificial Intelligence (AI) and data analytics stand as the cornerstone for interpreting the vast streams of data generated by IoT devices. These technologies enable predictive maintenance, quality control, and process optimization, transforming raw data into actionable insights. IBM’s AI solutions, for instance, have improved equipment reliability by up to 30% for some clients. Additionally, Generative AI and computer vision systems are pushing the boundaries of design and quality control, optimizing production lines with unprecedented precision.
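
One simple form of predictive maintenance fits a trend to a degradation signal and estimates when it will cross a failure limit. The Python sketch below does this with an ordinary least-squares line. The wear index, logging interval, and limit are hypothetical. Real tools, IBM's included, generally use more sophisticated models than a straight-line fit.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_until_limit(hours, wear, limit):
    """Extrapolate the wear trend to estimate operating hours left before `limit`.

    Returns None when wear is not increasing, i.e. no failure is predicted.
    """
    slope, intercept = fit_line(hours, wear)
    if slope <= 0:
        return None
    crossing = (limit - intercept) / slope
    return max(0.0, crossing - hours[-1])


# Hypothetical bearing-wear index logged every 100 operating hours.
hours = [0, 100, 200, 300]
wear = [1.0, 1.5, 2.0, 2.5]
print(round(hours_until_limit(hours, wear, limit=4.0)))  # 300
```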

Enhancing Visualization: Augmented and Virtual Reality

Augmented Reality (AR) and Virtual Reality (VR) technologies are revolutionizing training, maintenance, and design in manufacturing. Boeing’s use of AR for wiring harness assembly, which has resulted in a 40% increase in productivity, exemplifies the profound impact of these technologies. Similarly, Ford’s use of VR for employee training enhances safety and efficiency, illustrating how AR and VR are making complex processes more accessible and safer.

Revolutionizing Production: Additive Manufacturing and 3D Printing

Additive manufacturing, or 3D printing, is reshaping the manufacturing landscape by allowing for more creative designs, rapid prototyping, and the production of complex shapes with reduced material waste. Aerospace giant Airbus and medical technology company Stryker are just two examples of how industries are leveraging 3D printing to manufacture lightweight components and personalized medical implants, showcasing the versatility and efficiency of this technology.

The Role of Robotics: From Automation to Collaboration

The integration of advanced robotics, including collaborative robots (cobots) and autonomous mobile robots (AMRs), is enhancing productivity and operational efficiency. These technologies not only automate repetitive tasks but also work alongside human operators, improving safety and reducing the physical strain on workers. Universal Robots’ cobots and Festo’s BionicANTs research project illustrate these advances, highlighting how automation and collaboration are key to the future of manufacturing.

Edge Computing: Processing at the Source

Edge computing addresses the unique challenges of smart manufacturing by processing data near its source, reducing latency and improving real-time decision-making. Intel’s edge computing solutions exemplify how this technology optimizes industrial processes, underscoring the importance of real-time insights in enhancing productivity and product quality.
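
A typical edge pattern keeps raw sensor data on a local device and sends only a compact summary upstream, together with anything that needs immediate attention. The Python sketch below illustrates the idea. The function name, the message fields, and the temperature limit are hypothetical and are not taken from Intel's or any other vendor's edge platform.

```python
from statistics import mean


def summarize_at_edge(window, limit):
    """Reduce a window of raw readings to one compact message for the cloud.

    Raw values stay on the edge device; only aggregate statistics and any
    readings above `limit` are forwarded upstream.
    """
    return {
        "count": len(window),
        "mean": round(mean(window), 2),
        "max": max(window),
        "alerts": [v for v in window if v > limit],
    }


# Hypothetical spindle temperatures (degrees C) sampled during one window.
temps = [70.1, 70.3, 69.8, 85.2, 70.0]
print(summarize_at_edge(temps, limit=80.0))
# {'count': 5, 'mean': 73.08, 'max': 85.2, 'alerts': [85.2]}
```

Because the alert check runs next to the machine, a local controller can react without waiting for a round trip to the cloud. The summary alone still supports longer-term analysis.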

Virtual Modeling: The Power of Digital Twins

Digital twins offer a virtual representation of physical systems, allowing manufacturers to simulate, analyze, and optimize products and processes in a risk-free environment. Siemens’ use of digital twins to refine products and processes virtually highlights the potential of this technology to reduce time to market and improve product quality across various sectors.
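
To illustrate "simulate before you change," here is a deliberately tiny digital-twin sketch in Python. It assumes a serial production line whose output is set by its slowest station, and it ignores buffers, breakdowns, and changeovers. The `LineTwin` class and the station cycle times are hypothetical. Commercial twins, such as Siemens', model far more detail.

```python
from dataclasses import dataclass


@dataclass
class LineTwin:
    """Minimal steady-state model of a serial production line.

    cycle_times_s maps each station to its seconds per unit.
    """
    cycle_times_s: dict

    def bottleneck(self):
        # In a serial line, the slowest station sets the pace.
        return max(self.cycle_times_s, key=self.cycle_times_s.get)

    def throughput_per_hour(self):
        return 3600 / self.cycle_times_s[self.bottleneck()]

    def what_if(self, station, new_cycle_time_s):
        # Return a modified copy; the baseline twin is left untouched.
        changed = dict(self.cycle_times_s)
        changed[station] = new_cycle_time_s
        return LineTwin(changed)


line = LineTwin({"cutting": 40, "welding": 60, "painting": 45})
upgraded = line.what_if("welding", 42)
print(line.bottleneck(), line.throughput_per_hour())          # welding 60.0
print(upgraded.bottleneck(), upgraded.throughput_per_hour())  # painting 80.0
```

The virtual experiment shows that speeding up welding helps only until painting becomes the new bottleneck. That is exactly the kind of insight that is cheaper to discover in a model than on the shop floor.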

The Backbone of Connectivity: 5G and Blockchain

The introduction of 5G connectivity is set to revolutionize manufacturing with ultra-fast, reliable, and low-latency communication, essential for real-time monitoring and control. Ericsson’s collaboration with Audi on 5G applications in manufacturing showcases the potential of this technology to enhance connectivity and operational efficiency. Simultaneously, blockchain technology is emerging as a key player in supply chain management, offering transparency and security in the tracking of materials from source to store, as demonstrated by companies like De Beers and Walmart.
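
The tamper-evidence that makes blockchain attractive for traceability comes from hash chaining: each record stores the hash of the one before it. The Python sketch below shows only that idea. It has no distributed consensus, signatures, or replication, so it is not how De Beers' or Walmart's systems work. The record fields and lot IDs are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(record, prev_hash):
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(chain, record):
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "prev": prev_hash,
                  "hash": entry_hash(record, prev_hash)})


def verify(chain):
    """Recompute every link; editing any earlier record breaks the chain."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


ledger = []
append(ledger, {"lot": "LOT-001", "event": "harvested", "site": "Farm 12"})
append(ledger, {"lot": "LOT-001", "event": "shipped", "site": "DC 4"})
print(verify(ledger))                    # True
ledger[0]["record"]["site"] = "Farm 99"  # tamper with history
print(verify(ledger))                    # False
```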

Precision and Control: Computer Numerical Control (CNC)

Computer Numerical Control (CNC) technology brings high precision and repeatability to manufacturing processes. Because machine tools follow programmed instructions automatically, CNC machining can produce complex parts with high accuracy, as the machine tools built by Haas Automation demonstrate. This technology reflects the shift toward more precise, efficient, and customizable manufacturing solutions.
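
CNC machines are commonly driven by G-code, a list of motion and machine commands. The Python sketch below generates a program that cuts a rectangular outline using widely supported codes: G21 (millimetres), G90 (absolute positioning), G0 (rapid move), G1 (linear feed move), M3/M5 (spindle on/off), and M2 (program end). The function name, feeds, speeds, and depths are hypothetical. Controllers differ in dialect, so any real program should be checked against the machine's own manual and simulated before cutting.

```python
def rectangle_toolpath(width, height, depth, feed=300.0, spindle_rpm=10000, safe_z=5.0):
    """Return G-code lines that cut a width x height outline from X0 Y0 (mm)."""
    return [
        "G21",                                  # units: millimetres
        "G90",                                  # absolute coordinates
        f"M3 S{spindle_rpm}",                   # spindle on, clockwise
        f"G0 Z{safe_z:.3f}",                    # rapid to safe height
        "G0 X0.000 Y0.000",                     # rapid to start corner
        f"G1 Z{-depth:.3f} F{feed / 2:.1f}",    # plunge at half the cutting feed
        f"G1 X{width:.3f} Y0.000 F{feed:.1f}",  # cut the four sides
        f"G1 X{width:.3f} Y{height:.3f}",
        f"G1 X0.000 Y{height:.3f}",
        "G1 X0.000 Y0.000",
        f"G0 Z{safe_z:.3f}",                    # retract
        "M5",                                   # spindle off
        "M2",                                   # end of program
    ]


print("\n".join(rectangle_toolpath(50, 30, 2)))
```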

As we delve into these essential technologies, it’s clear that the future of manufacturing lies in the integration of digital and physical processes. From IoT and AI to AR/VR, 3D printing, and beyond, these technologies are not just reshaping manufacturing processes; they’re laying the groundwork for a more efficient, sustainable, and innovative industry. The journey towards smart manufacturing is a collaborative endeavor, requiring a fusion of technology, vision, and strategic implementation to unlock the full potential of this industrial revolution.

The Future of Smart Manufacturing: Trends Transforming the Industrial Landscape

The industrial sector is on the brink of a transformative era, propelled by advancements in smart manufacturing. This revolution is not merely technological; it signifies a shift towards more efficient, customizable, and sustainable production methods. As we navigate through this evolution, several key trends stand out, promising to redefine manufacturing as we know it.

Hyper-Automation: Elevating Efficiency

Hyper-automation goes beyond automating simple tasks to encompass complex decision-making, combining AI, machine learning, robotic process automation (RPA), and other technologies. The trend is expected to automate production scheduling, negotiate with suppliers in real time, and dynamically adjust product designs. This would improve efficiency and agility while freeing human workers to concentrate on strategic and creative tasks.
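
Automated scheduling ultimately rests on sequencing rules that software can apply continuously as orders change. The Python sketch below uses the classic earliest-due-date (EDD) rule. On a single machine, EDD minimizes the maximum lateness across jobs (Jackson's rule). The job names, hours, and tuple layout are hypothetical. A hyper-automated system would re-run logic like this, or a far richer optimizer, whenever new orders or machine data arrive.

```python
def schedule_edd(jobs):
    """Sequence jobs on one machine by earliest due date (EDD).

    jobs: list of (name, processing_hours, due_hour).
    Returns the sequence as (name, completion_hour) pairs and the maximum
    lateness (a negative value means every job finishes early).
    """
    clock = 0
    plan = []
    max_lateness = float("-inf")
    for name, processing, due in sorted(jobs, key=lambda job: job[2]):
        clock += processing
        plan.append((name, clock))
        max_lateness = max(max_lateness, clock - due)
    return plan, max_lateness


orders = [("A", 3, 10), ("B", 2, 4), ("C", 4, 8)]
print(schedule_edd(orders))  # ([('B', 2), ('C', 6), ('A', 9)], -1)
```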

Mass Customization: The New Production Paradigm

The concept of mass customization represents a shift from traditional mass production to the economical production of highly customized goods. This approach, facilitated by flexible manufacturing lines and AI, allows for personalized products, such as custom-designed sneakers, to be produced at scale and delivered rapidly, democratizing personalized products and transforming the manufacturing model.

From Predictive to Prescriptive Analytics

The evolution from predictive to prescriptive analytics marks a significant advancement. AI systems will not only forecast what is likely to happen but also recommend the best course of action. For example, AI could detect a machine’s developing fault and recommend when and how to service it, such as scheduling the repair during the next planned changeover. This minimizes downtime and shifts manufacturers from a reactive to a proactive stance.
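
The step from prediction to prescription can be as simple as comparing the expected cost of each possible response to a predicted fault. The Python sketch below assumes each action has an upfront cost and leaves some residual probability of failure. All action names, probabilities, and costs are hypothetical. Real prescriptive systems would also weigh production schedules, spare-part availability, and uncertainty in the predictions themselves.

```python
def recommend(options, failure_cost):
    """Pick the action with the lowest expected cost.

    options maps an action name to (upfront_cost, failure_probability_after_action);
    expected cost = upfront_cost + failure_probability * failure_cost.
    """
    def expected_cost(name):
        upfront, p_fail = options[name]
        return upfront + p_fail * failure_cost

    best = min(options, key=expected_cost)
    return best, expected_cost(best)


# Hypothetical figures for one machine with a developing fault.
options = {
    "keep running": (0, 0.30),
    "service at next planned changeover": (2_000, 0.05),
    "stop now and repair": (8_000, 0.0),
}
print(recommend(options, failure_cost=50_000))
# ('service at next planned changeover', 4500.0)
```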

Digital Twins: Bridging Digital and Physical

Digital twins are evolving to become more tightly integrated with their physical counterparts, receiving real-time data and influencing real-world assets. This integration enables manufacturers to test new product introductions in a virtual environment and make adjustments without risk, blurring the lines between digital simulation and physical execution.

Sustainability: A Core Manufacturing Focus

Sustainability is becoming a central focus of smart manufacturing, supported by AI optimization, sensors, and digital twins. Real-time monitoring of energy consumption and predictive supply chain models can help minimize environmental impact, in response to growing consumer and regulatory pressure for sustainable practices.
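
A common starting point for energy monitoring is tracking energy intensity (kWh per unit produced) against a baseline. The Python sketch below flags shifts that drift too far above that baseline and shifts that consumed power without producing anything. The shift data, baseline, and 10% tolerance are hypothetical choices, not industry standards.

```python
def energy_alerts(shifts, baseline_kwh_per_unit, tolerance=0.10):
    """Flag shifts whose kWh per unit exceeds the baseline by more than `tolerance`.

    shifts: list of (shift_id, kwh_used, units_produced).
    Returns (shift_id, kwh_per_unit) pairs; kwh_per_unit is None when
    energy was used but nothing was produced.
    """
    alerts = []
    limit = baseline_kwh_per_unit * (1 + tolerance)
    for shift_id, kwh, units in shifts:
        if units == 0:
            if kwh > 0:
                alerts.append((shift_id, None))  # idle consumption
            continue
        intensity = kwh / units
        if intensity > limit:
            alerts.append((shift_id, round(intensity, 2)))
    return alerts


shifts = [("mon-a", 1000, 500), ("mon-b", 1300, 520), ("tue-a", 1050, 500), ("tue-b", 40, 0)]
print(energy_alerts(shifts, baseline_kwh_per_unit=2.0))
# [('mon-b', 2.5), ('tue-b', None)]
```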

Emerging Trends Shaping the Future

- Human-Robot Collaboration: Collaborative robots (cobots) are enhancing human capabilities, leading to safer and more productive manufacturing environments.

- Hybrid Manufacturing: Combining traditional and modern manufacturing methods, such as additive and subtractive (CNC) processes in a single machine, pairs the reliability of established techniques with the flexibility of newer ones.

- Sustainable Manufacturing: Green manufacturing practices are becoming standard, focusing on energy efficiency, resource conservation, and waste reduction to meet environmental and consumer demands.

- Customization and Personalization: Advances in technology are enabling manufacturers to meet the growing demand for customized products, transforming customer engagement.

- Cybersecurity in Manufacturing: As manufacturing becomes more interconnected, robust cybersecurity measures are essential to protect sensitive data and ensure operational integrity.

- Supply Chain Innovation: Technologies like blockchain and IoT are revolutionizing supply chain management, enhancing transparency, efficiency, and resilience.

- Impact on Remote Work: Smart manufacturing technologies are enabling a more flexible and distributed workforce, with remote monitoring and management becoming increasingly feasible.

As we delve into these trends, it’s clear that the future of manufacturing is not just about adopting new technologies but about reimagining how products are designed, produced, and delivered. This new era of smart manufacturing promises to bring about a more efficient, sustainable, and customizable industrial landscape, reshaping the global economy and setting new standards for innovation and excellence.

Embarking on the journey towards smart manufacturing can seem daunting, but it doesn’t have to be. Whether you’re looking to harness the power of IoT, leverage AI and machine learning for predictive analytics, or integrate advanced robotics and digital twins into your operations, Zeren Software is your ideal partner. Our expertise spans the full spectrum of smart manufacturing technologies, enabling businesses to optimize their production processes, enhance efficiency, and achieve sustainability goals. Discover how our solutions can transform your manufacturing operations. Let’s redefine the future of industry together. Contact Zeren Software today, and let’s discuss how we can tailor our expertise to meet your unique needs in this dynamic and exciting field.